OpenJS In Action: How Wix Applied Multi-threading to Node.js and Cut Thousands of SSR Pods and Money

Author: Guy Treger, Sr. Software Engineer, Viewer-Server team, Wix

Background:

At Wix, as part of our sites’ Server-Side Rendering (SSR) architecture, we build and maintain the heavily used Server-Side-Rendering-Execution platform (aka SSRE).

SSRE is a Node.js-based, multipurpose code-execution platform used to execute React.js code written by front-end developers across the company. Most often, this JS code performs CPU-intensive operations that simulate site-rendering activities normally done in the browser.

Pain:

SSRE has reached a total traffic of about 1 million requests per minute (RPM), at times requiring far more production Kubernetes pods than we consider acceptable to serve it properly.

This exposed an inherent, painful mismatch:

On one side, the nature of Node.js: an environment best suited to running I/O-bound operations on its single-threaded event loop. On the other, the extremely high volume of CPU-bound tasks we had to handle as part of rendering sites on the server.

The naive solution we started with proved clearly inefficient and caused ever-growing pains across Wix’s servers and infrastructure, such as having to manage tens of thousands of production Kubernetes pods.

Solution:

We had to change something. As things stood, all of our heavy CPU work was done by a single thread in Node.js. The obvious idea is to offload that work to other compute units (processes or threads) that can run in parallel on multi-core hardware.

Node.js already offers multi-processing capabilities (child_process and cluster), but for our needs they were overkill. We needed a lighter solution that would introduce less overhead, both in the resources required and in overall maintenance and orchestration.

Relatively recently, Node.js introduced what it calls worker threads. The feature is Stable in v14, whose LTS line began in October 2020.

From the Node.js Worker-Threads documentation:

The worker_threads module enables the use of threads that execute JavaScript in parallel. To access it:

const worker = require('worker_threads');

Workers (threads) are useful for performing CPU-intensive JavaScript operations. They do not help much with I/O-intensive work. The Node.js built-in asynchronous I/O operations are more efficient than Workers can be.

Unlike child_process or cluster, worker_threads can share memory.
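To make the shared-memory point concrete, here is a minimal sketch (not taken from our codebase) in which several workers increment a single counter stored in a `SharedArrayBuffer`. The buffer is passed through `workerData` and is shared with each worker rather than copied, and `Atomics.add` keeps concurrent increments from being lost. The function name `countInParallel` and the inline `eval: true` worker are illustrative choices only.

```typescript
import { Worker } from "worker_threads";

// Worker body, run with eval: true (a CommonJS script, so require() is available).
// It increments slot 0 of the shared counter `iterations` times.
const WORKER_SOURCE = `
  const { workerData } = require("worker_threads");
  const counter = new Int32Array(workerData.shared);
  for (let i = 0; i < workerData.iterations; i++) {
    Atomics.add(counter, 0, 1); // atomic read-modify-write: no lost updates
  }
`;

// Spawns `threads` workers that all write to the same memory, then reads the total.
async function countInParallel(threads: number, iterations: number): Promise<number> {
  // One 32-bit integer, visible to every thread that receives this buffer
  const shared = new SharedArrayBuffer(Int32Array.BYTES_PER_ELEMENT);
  const counter = new Int32Array(shared);

  const runs = Array.from({ length: threads }, () =>
    new Promise<void>((resolve, reject) => {
      // A SharedArrayBuffer inside workerData is shared with the worker, not cloned
      const worker = new Worker(WORKER_SOURCE, { eval: true, workerData: { shared, iterations } });
      worker.once("error", reject);
      worker.once("exit", (code) =>
        code === 0 ? resolve() : reject(new Error(`worker exited with code ${code}`))
      );
    })
  );

  await Promise.all(runs);
  return Atomics.load(counter, 0);
}
```

For example, `countInParallel(4, 10_000)` should resolve to 40000. With a plain, non-atomic `counter[0]++` in the worker, increments from different threads could be lost.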

So Node.js offers native threading support that we could use, but since it is fairly new, it still lacks some maturity and is not entirely smooth to use in the production-grade code of a critical application.

What we were mainly missing was:

- Task-pool capabilities

What does Node.js offer?

Out of the box, you can manually spawn Worker threads and manage their lifecycle yourself, e.g.:

const { Worker } = require("worker_threads");

// Create a new worker, passing it initial data
const worker = new Worker("./worker.js", { workerData: { /* … */ } });

// Log the worker's exit code once its thread stops
worker.on("exit", (exitCode) => {
  console.log(exitCode);
});
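Everything beyond that, such as reusing threads and queueing work while all of them are busy, is up to you. As an illustration of what a pool has to do, here is a minimal fixed-size pool built only on `worker_threads`. It is a hypothetical sketch, not what we shipped (we ended up using generic-pool); `TinyThreadPool` and its squaring worker are placeholders for real CPU-heavy work, and error handling is deliberately minimal.

```typescript
import { Worker } from "worker_threads";

// Placeholder worker body (eval: true): squares each number it receives.
// Stands in for real CPU-heavy work such as a render call.
const WORKER_SOURCE = `
  const { parentPort } = require("worker_threads");
  parentPort.on("message", (n) => parentPort.postMessage(n * n));
`;

interface Task {
  input: number;
  resolve: (result: number) => void;
  reject: (err: Error) => void;
}

// Hypothetical fixed-size pool: threads are spawned once and reused,
// and tasks wait in a FIFO queue while every thread is busy.
class TinyThreadPool {
  private readonly workers: Worker[] = [];
  private readonly idle: Worker[] = [];
  private readonly queue: Task[] = [];

  constructor(size: number) {
    for (let i = 0; i < size; i++) {
      const worker = new Worker(WORKER_SOURCE, { eval: true });
      this.workers.push(worker);
      this.idle.push(worker);
    }
  }

  run(input: number): Promise<number> {
    return new Promise((resolve, reject) => {
      this.queue.push({ input, resolve, reject });
      this.dispatch();
    });
  }

  // Pairs idle workers with queued tasks until one of the two runs out.
  private dispatch(): void {
    while (this.idle.length > 0 && this.queue.length > 0) {
      const worker = this.idle.pop()!;
      const task = this.queue.shift()!;
      const onMessage = (result: number) => {
        worker.off("error", onError);
        this.idle.push(worker); // back to the pool for the next task
        task.resolve(result);
        this.dispatch();
      };
      const onError = (err: Error) => {
        worker.off("message", onMessage);
        task.reject(err); // a crashed worker is not returned to the pool
      };
      worker.once("message", onMessage);
      worker.once("error", onError);
      worker.postMessage(task.input);
    }
  }

  async close(): Promise<void> {
    await Promise.all(this.workers.map((w) => w.terminate()));
  }
}
```

A production pool would also need to replace crashed workers, time out long waits and cap the queue, which is where a dedicated pooling library helps.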

- RPC-like inter-thread communication

What does Node.js offer?

Out of the box, threads (e.g. the main thread and the workers it spawned) can communicate with each other via asynchronous message passing:

// Send a message to the worker
aWorker.postMessage({ someData: data });

// Listen for a single message from the worker
aWorker.once("message", (response) => {
  console.log(response);
});

Raw messaging can make the code much harder to read and maintain. We were looking for something friendlier, where one thread could asynchronously “call a method” on another thread and simply get the result back.
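Under the hood, this kind of “call a method” API boils down to tagging each request with an id and resolving the matching promise when the reply with that id arrives. The following is a hypothetical, hand-rolled illustration of the idea (it is not comlink's implementation); the `{ id, method, args }` message shape and the `add`/`greet` methods are made up for the example.

```typescript
import { Worker } from "worker_threads";

// Worker side (hypothetical API): a table of named methods.
// Requests are plain data { id, method, args }; replies are { id, result } or { id, error }.
const WORKER_SOURCE = `
  const { parentPort } = require("worker_threads");
  const api = {
    add: (a, b) => a + b,
    greet: (name) => "hello " + name,
  };
  parentPort.on("message", async ({ id, method, args }) => {
    try {
      const result = await api[method](...args);
      parentPort.postMessage({ id, result });
    } catch (err) {
      parentPort.postMessage({ id, error: String(err) });
    }
  });
`;

interface Reply {
  id: number;
  result?: unknown;
  error?: string;
}

// Main-thread side: turns postMessage/on("message") into promise-returning calls.
function createRpcClient(worker: Worker) {
  let nextId = 0;
  const pending = new Map<number, { resolve: (v: unknown) => void; reject: (e: Error) => void }>();

  // Route each reply to the promise that is waiting for its id
  worker.on("message", ({ id, result, error }: Reply) => {
    const entry = pending.get(id);
    if (!entry) return;
    pending.delete(id);
    if (error !== undefined) entry.reject(new Error(error));
    else entry.resolve(result);
  });

  return {
    call(method: string, ...args: unknown[]): Promise<unknown> {
      const id = nextId++;
      return new Promise((resolve, reject) => {
        pending.set(id, { resolve, reject });
        worker.postMessage({ id, method, args });
      });
    },
  };
}
```

With this in place, `await rpc.call("add", 2, 3)` reads like a local async call even though the work happens on another thread. Libraries such as comlink go further by wrapping the whole thing in a `Proxy`, so you write `api.add(2, 3)` instead.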

We went on to explore and test various open-source packages for thread management and communication.

In the end, we dropped these good-looking packages and looked for different approaches: we realized that we couldn’t just take something off the shelf and plug it in.

Eventually, we arrived at a setup we were happy with.

We combined the native Worker API with two great open-source packages:

- generic-pool (npm): a solid and popular pooling API, which gave us the thread-pool behavior we wanted.

- comlink (npm): a popular package best known for RPC-like communication in the browser (with the long-established Web Workers). It recently added support for Node.js Workers, and it made the inter-thread communication in our code much more concise and elegant.

Our code now looks roughly like this:

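import { Worker, WorkerOptions } from 'worker_threads';
import * as genericPool from 'generic-pool';
import * as Comlink from 'comlink';
import nodeEndpoint from 'comlink/dist/umd/node-adapter';

// A pooled resource: the thread itself plus a Comlink proxy to the API it exposes
interface OurWorkerThread {
  worker: Worker;
  workerApi: Comlink.Remote<ModuleExecutionWorkerApi>;
}

export const createThreadPool = ({
  workerPath,
  workerOptions,
  poolOptions,
}: {
  workerPath: string;
  workerOptions?: WorkerOptions;
  poolOptions?: genericPool.Options;
}): genericPool.Pool<OurWorkerThread> => {
  return genericPool.createPool<OurWorkerThread>(
    {
      // generic-pool expects create() to return a Promise
      create: async () => {
        const worker = new Worker(workerPath, workerOptions);
        // Let the worker call back into diagnostic APIs hosted on the main thread
        Comlink.expose({
          ...diagnosticApis,
        }, nodeEndpoint(worker));
        return {
          worker,
          // RPC proxy: each method call becomes a message to the worker, awaited as a Promise
          workerApi: Comlink.wrap<ModuleExecutionWorkerApi>(nodeEndpoint(worker)),
        };
      },
      destroy: async ({ worker }: OurWorkerThread) => {
        await worker.terminate();
      },
    },
    poolOptions
  );
};

// Borrow a worker from the pool, execute the module on it, then release the worker back
const workerResponse = await workerPool.use(({ workerApi }: OurWorkerThread) =>
  workerApi.executeModule({
    ...executionParamsFrom(incomingRequest),
  })
);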

// … Do stuff with response
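The `poolOptions` passed to `createThreadPool` are regular generic-pool options (`min`, `max`, `acquireTimeoutMillis`, `maxWaitingClients` and so on). As a hedged example, one plausible policy derives the pool size from the machine's core count. Reserving one core for the main thread's event loop, and the specific timeout and queue limits, are assumptions for illustration rather than measured recommendations, and `threadPoolOptions` is a hypothetical helper.

```typescript
import * as os from "os";

// Hypothetical sizing policy: leave one core for the main thread's event loop
// (HTTP handling, I/O) and give every other core to a CPU-bound worker.
function threadPoolOptions(cores: number = os.cpus().length) {
  const size = Math.max(1, cores - 1);
  return {
    min: size,                   // min === max keeps every worker alive, so requests skip thread start-up
    max: size,                   // never run more CPU-bound workers than spare cores
    acquireTimeoutMillis: 5_000, // reject an acquire() that waits longer than 5 s for a free worker
    maxWaitingClients: 1_000,    // reject new acquire() calls once this many are already queued
  };
}
```

The result would be passed as `poolOptions`, e.g. `createThreadPool({ workerPath, workerOptions, poolOptions: threadPoolOptions() })`. Setting `min` equal to `max` yields a constant-size pool; relaxing that is the kind of tuning that a variable-size pool would explore.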

One major takeaway from this journey was that retrofitting worker threads onto existing code is by no means straightforward. Logic objects (i.e. JS functions) cannot be passed back and forth between threads, so considerable refactoring is sometimes needed: you must define clear, concrete, pure-data APIs for communication between the main thread and the workers, and adjust the code accordingly.
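A quick way to see this constraint: `postMessage` (and `workerData`) rely on the structured clone algorithm, which rejects functions with a `DataCloneError`. The hypothetical helper below tries to post a value through a `MessageChannel` and reports whether it could be cloned. The `RenderRequest` shape is an invented example of a pure-data API, not our real one.

```typescript
import { MessageChannel } from "worker_threads";

// Returns true if `value` survives the structured clone that postMessage performs.
function canPostMessage(value: unknown): boolean {
  const { port1, port2 } = new MessageChannel();
  try {
    port1.postMessage(value); // throws synchronously (DataCloneError) for functions
    return true;
  } catch {
    return false;
  } finally {
    port1.close();
    port2.close();
  }
}

// Before: the request carries behavior (a callback), which cannot cross threads.
const callbackRequest = {
  props: { title: "Home" },
  onRendered: (html: string) => console.log(html.length),
};

// After: a pure-data request (hypothetical shape). The worker decides what to run from
// plain fields and returns plain data; the caller runs any follow-up logic itself.
interface RenderRequest {
  component: string;
  props: Record<string, unknown>;
}
const dataRequest: RenderRequest = { component: "HomePage", props: { title: "Home" } };
```

Here `canPostMessage(callbackRequest)` is false while `canPostMessage(dataRequest)` is true; the refactoring work is mostly about turning the first kind of call site into the second.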

Results:

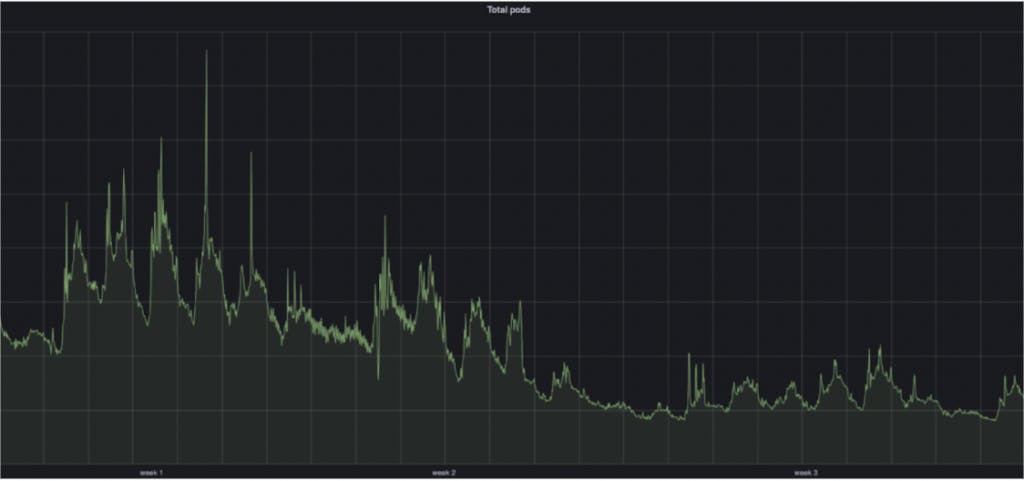

The results were amazing: we cut the number of pods by over 70% and made the entire system more stable and resilient. A direct consequence was a substantial cut in Wix’s infrastructure costs.

Some numbers:

- Our initial goal was achieved: total SSRE pod count dropped by ~70%. Correspondingly, RPM per pod improved by 153%.

- Better SLA and a more stable application.

- A big cut in total direct SSRE compute cost: -21%.

Lessons learned and future planning:

We’ve learned that Node.js is indeed also suitable for CPU-bound, high-throughput services. We set out to reduce our infrastructure-management overhead, but this modest goal turned out to be overshadowed by much more substantial gains.

The introduction of multithreading into the SSRE platform has opened the way for follow-up research into optimizations and improvements:

- Finding the optimal number of CPU cores per machine, possibly allowing for variable-size thread pools.

- Refactoring the application so that workers do work that is as purely CPU-bound as possible.

- Researching memory sharing between threads to avoid cloning large objects.

- Applying this solution to other major Node.js-based applications at Wix.
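On the memory-sharing research item: besides `SharedArrayBuffer`, Node.js can also move an `ArrayBuffer` to another thread instead of copying it, by listing it in the transfer list argument of `postMessage`. After a transfer, the sender's buffer is detached and its `byteLength` becomes 0. The sketch below demonstrates this with a `MessageChannel` instead of a real worker for brevity; `sendBuffer` is a hypothetical helper.

```typescript
import { MessageChannel } from "worker_threads";

// Posts `buf` either by copy (structured clone) or by transfer, and returns the
// sender-side byteLength afterwards: unchanged after a copy, 0 after a transfer.
function sendBuffer(buf: ArrayBuffer, transfer: boolean): number {
  const { port1, port2 } = new MessageChannel();
  try {
    port1.postMessage(buf, transfer ? [buf] : []);
    return buf.byteLength;
  } finally {
    port1.close();
    port2.close();
  }
}
```

Transferring avoids the copy but gives up access on the sending side, whereas a `SharedArrayBuffer` keeps both sides' access at the cost of explicit synchronization (e.g. `Atomics`).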