How The Weather Company uses Node.js in production

Using Node.js improved site speed, performance, and scalability

This piece was written by Noel Madali and originally appeared on the IBM Developer Blog. IBM is a member of the OpenJS Foundation.

The Weather Company uses Node.js to power their weather.com website, a multinational weather information and news website available in 230+ locales and localized in about 60 languages. As an industry leader in audience reach and accuracy, weather.com delivers weather data, forecasts, observations, historical data, news articles, and video.

Because weather.com offers a location-based service that is used throughout the world, its infrastructure must support consistent uptime, speed, and precise data delivery. Scaling the solution to billions of unique locations has created multiple technical challenges and opportunities for the technical team. In this blog post, we cover some of the unique challenges we had to overcome when building weather.com and discuss how we ended up using Node.js to power our internationalized weather application.

Drupal ‘n Angular (DNA): The early days

In 2015, we were a Drupal ‘n Angular (DNA) shop. We unofficially pioneered the industry by marrying Drupal and Angular together to build a modular, content-based website. We used Drupal as our CMS to control content and page configuration, and we used Angular to code front-end modules.

Front-end modules were small blocks of user interface that had data and some interactive elements. Content editors would move the modules around to visually create a page, and use Drupal to create articles about weather and publish them on the website.

DNA was successful in rapidly expanding the website’s content and giving editors the flexibility to create page content on the fly.

As our usage of DNA grew, we faced many technical issues which ultimately boiled down to three main themes:

- Poor performance

- Instability

- Slower time for developers to fix, enhance, and deploy code (also known as velocity)

Poor performance

Our site suffered from poor performance, with sluggish load times and unreliable availability. This, in turn, directly impacted our ad revenue since a faster page translated into faster ad viewability and more revenue generation.

To address some of our performance concerns, we conducted different front-end experiments.

- We analyzed and evaluated modules to determine what we could change. For example, we evaluated getting rid of some modules that were not used all the time or we rewrote modules so they wouldn’t use giant JS libraries.

- We evaluated our usage of a tag manager in reference to ad serving performance.

- We lazy-loaded modules so that they were left out of the first load, which reduced the amount of JavaScript served to the client up front.
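As a rough illustration of the lazy-loading experiment, the sketch below wraps a loader so that a module is fetched only when it is first needed and the result is reused afterwards. The `lazy` helper and the `./modules/radar-map.js` path are hypothetical names chosen for this example, not code from the weather.com codebase.

```javascript
// Wrap a loader so the module is requested only on first use.
// The promise is cached, so later calls reuse the same load.
function lazy(loader) {
  let cached = null;
  return function load() {
    if (cached === null) {
      cached = Promise.resolve()
        .then(loader)
        .catch((err) => {
          // Forget a failed load so a later call can try again.
          cached = null;
          throw err;
        });
    }
    return cached;
  };
}

// Hypothetical browser usage with a dynamic import():
// const loadRadarMap = lazy(() => import('./modules/radar-map.js'));
// button.addEventListener('click', async () => {
//   const { render } = await loadRadarMap();
//   render(document.querySelector('#radar'));
// });
```

With a bundler that supports dynamic `import()`, the lazily loaded module typically ends up in its own file, so it is not part of the JavaScript sent on first load.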

Instability

Because of the fragile deployment process of using Drupal with Angular, our site suffered from too much downtime. The deployment process was a matter of taking the name of a git branch and entering it into a UI to get released into different environments. There was no real build process, only version control.

Ultimately, this led to many bad practices that impacted developers, including a lack of version control methodology, non-reproducible builds, and the like.

Slower developer velocity

The majority of our developers had front-end experience, but very few of them were knowledgeable about the inner workings of Drupal and PHP. As such, features and bug fixes related to PHP were not addressed as quickly due to knowledge gaps.

Large deployments contributed to slower velocity as well as stability issues, where small changes could break the entire site. Since a deployment was the entire codebase (Drupal, Drupal plugins/modules, front-end code, PHP scripts, etc), small code changes in a release could easily get overlooked and not be properly tested, breaking the deployment.

Overall, while we had a few quick wins with DNA, the constant regressions due to the setup forced us to consider alternative paths for our architecture.

Rethinking our architecture to include Node.js

Our first foray into using Node.js was a one-off project for creating a lite experience for weather.com that was completely server-side rendered and had minimal JavaScript. The audience had limited bandwidth and minimal device capabilities (for example, low-end smartphones using Facebook’s Free Basics).

Stakeholders were happy with the lite experience, commenting on the nearly instantaneous page loads. Analyzing this proof-of-concept was important in determining our next steps in our architectural overhaul.

Differing from DNA, the lite experience:

- Rendered pages on the server side only

- Kept the front-end footprint under 30KB (virtually no JavaScript, little CSS, few images)
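To make the two points above concrete, here is a minimal sketch of a server-only rendered page using nothing but Node.js built-ins. The function names, the sample location, and the forecast values are assumptions made for illustration; the actual lite experience is not shown in this post.

```javascript
const http = require('node:http');

// Escape text before placing it in HTML so data cannot inject markup.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Build the whole page on the server; no client-side JavaScript is sent.
function renderLitePage(forecast) {
  const rows = forecast.days
    .map((d) => `<li>${escapeHtml(d.label)}: ${escapeHtml(d.high)}&deg; / ${escapeHtml(d.low)}&deg;</li>`)
    .join('');
  return '<!doctype html><html><head><meta charset="utf-8">' +
    `<title>${escapeHtml(forecast.location)}</title></head>` +
    `<body><h1>${escapeHtml(forecast.location)}</h1><ul>${rows}</ul></body></html>`;
}

// Hypothetical data; a real service would look this up per location.
const sample = {
  location: 'Atlanta, GA',
  days: [{ label: 'Today', high: 75, low: 58 }],
};

function createServer() {
  return http.createServer((req, res) => {
    const html = renderLitePage(sample);
    res.writeHead(200, {
      'Content-Type': 'text/html; charset=utf-8',
      'Content-Length': Buffer.byteLength(html),
    });
    res.end(html);
  });
}

// createServer().listen(3000);
```

Because the response is a single small HTML document, its size can be checked against a budget such as 30KB with `Buffer.byteLength(html)`.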

We used what we learned from the lite experience to help us serve our website more performantly. This started with rethinking our DNA architecture.

Metrics to measure success

Before we worked on a new architecture, we had to show our business that a re-architecture was needed. The first thing we had to determine was what to measure to show success.

We consulted with the Google Ad team to understand how exactly a high-performing webpage impacts business results. Google showed us proof that improving page speed increases ad viewability which translates to revenue.

With that in hand, each day we conducted tests across a set of pages to measure:

- Speed index

- Time to first interaction

- Bytes transferred

- Time to first ad call

We used a variety of tools to collect our metrics: WebPageTest, Lighthouse, sitespeed.io.

As we compiled a list of these metrics, we were able to judge whether certain experiments were beneficial or not. We used our analysis to determine what needed to change in our architecture to make the site more successful.
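One way to judge an experiment against metrics like these is to compare the median of repeated runs for a control page and an experimental page. The sketch below assumes each run is a plain object keyed by metric name; the field names and any numbers passed in are hypothetical and are not weather.com measurements.

```javascript
// The median is less sensitive than the mean to a single slow run.
function median(values) {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0
    ? (sorted[mid - 1] + sorted[mid]) / 2
    : sorted[mid];
}

// Compare two sets of runs metric by metric. For all four metrics listed
// above, lower is better, so a negative changePercent is an improvement.
function compareRuns(controlRuns, experimentRuns, metrics) {
  const report = {};
  for (const metric of metrics) {
    const control = median(controlRuns.map((run) => run[metric]));
    const experiment = median(experimentRuns.map((run) => run[metric]));
    report[metric] = {
      control,
      experiment,
      changePercent: ((experiment - control) / control) * 100,
    };
  }
  return report;
}

// Hypothetical usage, with runs exported from a tool such as WebPageTest:
// const report = compareRuns(controlRuns, betaRuns, ['speedIndex', 'bytesTransferred']);
```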

While we intended to completely rewrite our DNA website, we acknowledged that we needed to stair step our approach for experimenting with a newer architecture. Using the above methodology, we created a beta page and A/B tested it to verify its success.

From Shark Tank to a beta of our architecture

Recognizing the performance of our original Node.js proof of concept, we held a “Shark Tank” session where we presented and defended different ideal architectures. We evaluated whole frameworks or combinations of libraries like Angular, React, Redux, Ember, lodash, and more.

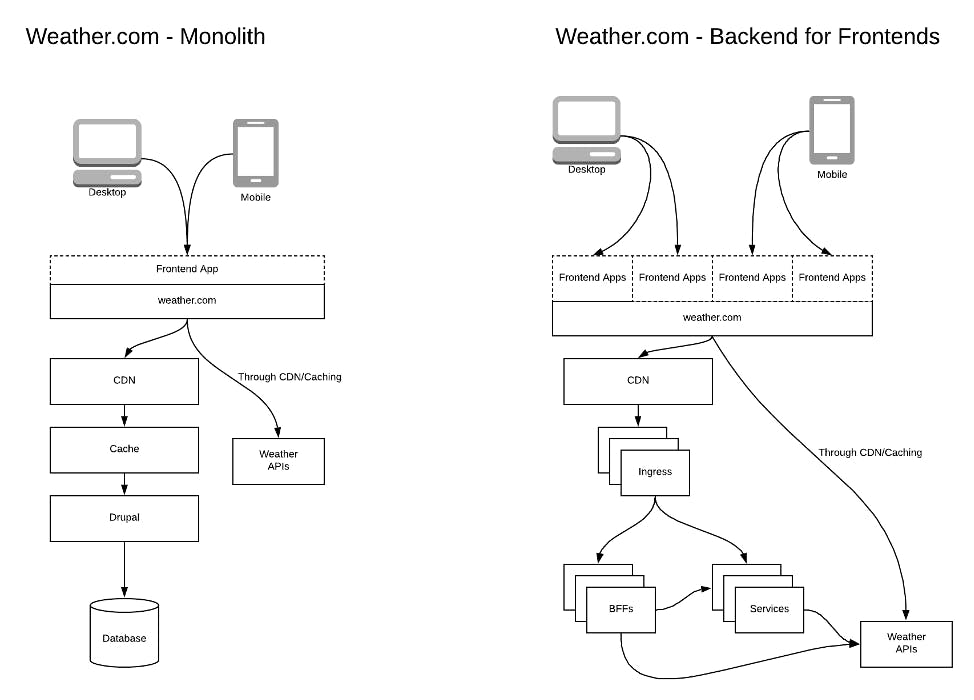

From this experiment, we collectively agreed to move from our monolithic architecture to a Node.js backend and newer React frontend. Our timeline for this migration was between nine months and a year.

Ultimately, we decided to use a pattern of small JS libraries and tools, similar to that of a UNIX operating system’s tool chain of commands. This pattern gives us the flexibility to swap out one component from the whole application instead of having to refactor large amounts of code to include a new feature.

On the backend, we needed to decouple page creation and page serving. We kept Drupal as a CMS and created a way for documents to be published out to more scalable systems which can be read by other services. We followed the pattern of Backends for Frontends (BFF), which allowed us to decouple our page frontends and allow for more autonomy of our backend downstream systems. We use the documents published by the CMS to deliver pages with content (instead of the traditional method of the CMS monolith serving the pages).
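The decoupling described above can be sketched roughly as follows: the CMS only publishes documents to a store, and a page-specific backend reads those documents and gathers data from downstream services. The in-memory `Map`, the function names, and the `forecast` service are all stand-ins assumed for this example, not the real systems.

```javascript
// Stand-in for the scalable store that the CMS publishes documents to.
const publishedDocs = new Map();

// Publishing side: the CMS writes documents, it never serves pages.
function publish(id, doc) {
  publishedDocs.set(id, Object.freeze({ ...doc }));
}

// Hypothetical downstream service; a real one would be a network call.
async function fetchForecast(locationId) {
  return { locationId, summary: 'placeholder forecast' };
}

// The BFF: reads the published page document, fetches what each listed
// module needs, and returns one payload shaped for this frontend.
async function buildPagePayload(pageId, locationId, services) {
  const page = publishedDocs.get(pageId);
  if (!page) {
    return { status: 404, modules: [] };
  }
  const modules = await Promise.all(
    page.modules.map(async (name) => {
      const fetcher = services[name];
      const data = fetcher ? await fetcher(locationId) : null;
      return { name, data };
    })
  );
  return { status: 200, title: page.title, modules };
}
```

In this arrangement the page backend depends only on the published document format, so the CMS and the downstream services can change independently of each other.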

Even though Drupal and PHP can render server-side, our developers were more familiar with JavaScript, so using Node.js to implement isomorphic (universal) rendering of the site increased our development velocity.

Developing with Node.js was an easy focus shift for our previous front-end oriented developers. Since the majority of our developers had a primarily JavaScript background, we stayed away from solutions that revolved around separate server-side languages for rendering.

Over time, we implemented and evolved our usage from our first project. After developing our first few pages, we decided to move away from ExpressJS to Koa to use newer JS standards like async/await. We started with pure React but switched to React-like Inferno.js.
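The appeal of async/await middleware can be shown without any framework. The `compose` function below is a simplified stand-in written for this example (it is not Koa's implementation and omits safeguards a real framework has); it only illustrates how each middleware can `await next()` and then continue once everything downstream has finished. The middleware names and the context shape are likewise assumptions.

```javascript
// Chain middleware so each one receives a `next` function it can await.
function compose(middleware) {
  return function run(ctx) {
    function dispatch(i) {
      if (i === middleware.length) return Promise.resolve();
      try {
        return Promise.resolve(middleware[i](ctx, () => dispatch(i + 1)));
      } catch (err) {
        return Promise.reject(err);
      }
    }
    return dispatch(0);
  };
}

// Code after `await next()` runs once the downstream middleware is done.
async function timing(ctx, next) {
  const start = Date.now();
  await next();
  ctx.headers['x-response-time'] = `${Date.now() - start}ms`;
}

// Error handling becomes an ordinary try/catch around the rest of the chain.
async function errors(ctx, next) {
  try {
    await next();
  } catch (err) {
    ctx.status = 500;
    ctx.body = 'Internal error';
  }
}

// Hypothetical page handler.
async function page(ctx) {
  ctx.status = 200;
  ctx.body = '<h1>Forecast</h1>';
}

const handle = compose([timing, errors, page]);
// handle({ headers: {} }) resolves after all three have run.
```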

After evaluating many different build systems (gulp, grunt, browserify, systemjs, etc), we decided to use Webpack to facilitate our build process. We saw Webpack’s growing maturity in a fast-paced ecosystem, as well as the pitfalls of its competitors (or lack thereof).

Webpack solved our core issue of DNA’s JS aggregation and minification. With a centralized build process, we could build JS code using a standardized module system, take advantage of the npm ecosystem, and minify the bundles (all during the build process and not during runtime).

Moving from client-side to server-side rendering of the application improved our speed index and got information to the user faster. React helped us with universal rendering: being able to share code on both the frontend and backend was crucial to us for server-side rendering and code reuse.

Our first launch of our beta page was a Single Page App (SPA). Traditionally, we had to render each page and location as a hit back to the origin server. With the SPA, we were able to reduce our hits back to the origin server and improve the speed of rendering the next view thanks to universal rendering.

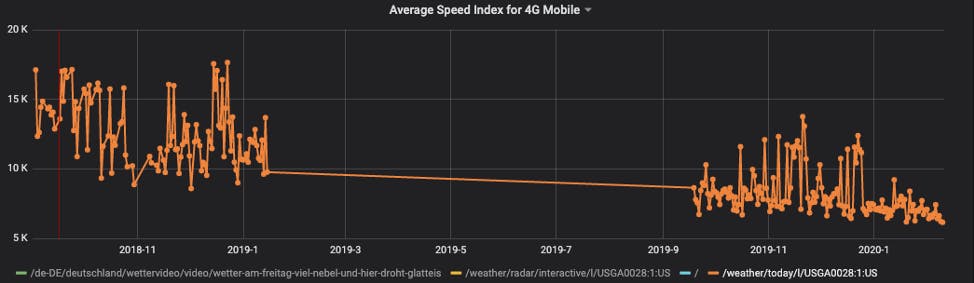

The following image shows how much faster the webpage response was after the SPA was introduced.

As our solution included more Node.js, we were able to take advantage of a lot of the tooling associated with a Node.js ecosystem, including ESLint for linting, Jest for testing, and eventually Yarn for package management.

Linting and testing, as well as a more refined CI/CD pipeline, helped reduce bugs in production. This led to a more mature and stable platform as a whole, higher engineering velocity, and increased developer happiness.

Changing deployment strategies

Recognizing our problems with our DNA deployments, we knew we needed a better solution for delivering code to infrastructure. With our DNA setup, we used a managed system to deploy Drupal. For our new solution, we decided to take advantage of newer, container-based deployment and infrastructure methodologies.

By moving to Docker and Kubernetes, we achieved many best practices:

- Separating out disparate pages into different services reduces failures

- Building stateless services allows for less complexity, ease of testing, and scalability

- Builds are repeatable (Docker images ensure the right artifacts are deployed and consistent)

Our Kubernetes deployment allowed us to be truly distributed across four regions and seven clusters, with dozens of services scaled from 3 to 100+ replicas running on 400+ worker nodes, all on IBM Cloud.

Addressing a familiar set of performance issues

After running a successful beta experiment, we continued down the path of migrating pages into our new architecture. Over time, some familiar issues cropped up:

- Pages became heavier

- Build times were slower

- Developer velocity decreased

We had to evolve our architecture to address these issues.

Beta v2: Creating a more performant page

Our second evolution of the architecture was a renaissance (rebirth). We had to go back to the basics, revisit our lite experience, and see why it was successful. We analyzed our performance issues and concluded that the SPA was becoming a performance bottleneck. Although an SPA benefits second page visits, we came to understand that the majority of our users visit the website and leave once they get their information.

We designed and built the solution without an SPA, but kept React hydration in order to keep code reuse across the server and client side. We paid more attention to the tooling during development by tracking code coverage (the percentage of delivered client-side JS that is actually used) and working to keep it high.
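Code coverage in this used-versus-delivered sense can be computed from coverage data that browser tooling produces. The sketch below assumes entries with a `text` field (the delivered script) and a `ranges` array of `{ start, end }` offsets that were executed, which is the shape Puppeteer's JS coverage entries use; whether the team measured it this way is not stated in the post.

```javascript
// Count the characters covered by a set of [start, end) ranges,
// without double-counting ranges that overlap.
function coveredLength(ranges) {
  const sorted = [...ranges].sort((a, b) => a.start - b.start);
  let total = 0;
  let cursorEnd = 0;
  for (const { start, end } of sorted) {
    const from = Math.max(start, cursorEnd);
    if (end > from) {
      total += end - from;
      cursorEnd = end;
    }
  }
  return total;
}

// entries: [{ url, text, ranges: [{ start, end }] }]
// Returns the percentage of delivered JS characters that were executed.
function usedPercent(entries) {
  let delivered = 0;
  let used = 0;
  for (const entry of entries) {
    delivered += entry.text.length;
    used += coveredLength(entry.ranges);
  }
  return delivered === 0 ? 0 : (used / delivered) * 100;
}
```

A low percentage for a page suggests that much of the JavaScript it ships is a candidate for removal, splitting, or lazy loading.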

Removing the SPA overall was key to reducing build times as well. Since a page was no longer stitched together from a singular entry point, we split the Webpack builds so that individual pages can have their own set of JS and assets.

We were able to reduce our page weight even more compared to the Beta site. Reducing page weight had an overall impact on page load times. The graph below shows how speed index decreased.

Note: Some data was lost between January and October of 2019.

This architecture is now our foundation for any and all pages on weather.com.

Conclusion

weather.com was not transformed overnight and it took a lot of work to get where we are today. Adding Node.js to our ecosystem required some amount of trial and error.

We achieved success by understanding our issues, collecting metrics, and implementing and then reimplementing solutions. Our journey was an evolution. Not only was it a change to our back end, but we had to be smarter on the front end to achieve the best performance. Changing our deployment strategy and infrastructure allowed us to achieve multiple best practices, reduce downtimes, and improve overall system stability. JavaScript being used in both the back end and front end improved developer velocity.

As we continue to architect, evolve, and expand our solution, we are always looking for ways to improve. Check out weather.com on your desktop, or for our newer/more performant version, check out our mobile web version on your mobile device.